I've been spending a lot of time lately coding unit tests. If I were writing tests for a class named Chainsaw, I would start with a blank class file and modify it until it looks something like this:

    using System;
    using System.Collections.Generic;
    using System.Text;
    using NUnit.Framework;
    using Pedro.PowerTools;

    namespace Pedro.PowerTools.UnitTests
    {
        [TestFixture]
        public class ChainsawUnitTests
        {
            ChainsawAdapter myAdapter;

            [TestFixtureSetUp]
            public void FixtureSetUp()
            {
                myAdapter = new ChainsawAdapter();
            }
        }
    }

If you were to compare all of the TestFixtures in the assembly, you would find:

- For the most part, I have the same 'using' statements in each

- They all have the same namespace

- The class name for each TestFixture is the class to test, followed by "UnitTests"

- The data adapter name is the class to test, followed by "Adapter"

*Note - Yes, I'm testing several layers at once. Call it efficient. Call it lazy. It's simply my preference.

Though this isn't difficult to create by hand, there's an easier way - Item Templates. What I want is a way to right-click on my Project, choose Add > New Item, pick "MyUnitTests" from the list, and have it magically create the basic code. Turns out it's quite simple.

Start by placing the above code in a .cs file. Choose File > Export Template... from the menu. In the Choose Template Type screen, select "Item template" and the project where the .cs file exists.

Click Next. In the Select Item To Export screen, check the box beside the .cs file (ChainsawUnitTests.cs in my case).

Click Next. Under Select Item References, check the box for nunit.framework. Ignore the warning.

Click Next. On the Select Template Options screen, enter the Template name and description. Make sure the box to "Display an explorer window..." is checked, and click Finish.

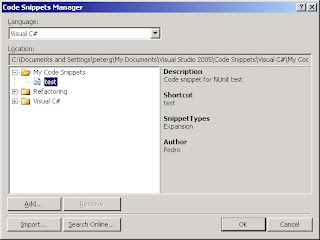

Once the template is generated, it will be placed in a .zip with the name of the template (for me, this is MyUnitTests.zip). This is a decent start, but we need to modify a few things.

Open the .zip, and you should see the following files:

- _TemplateIcon.ico

- ChainsawUnitTests.cs

- MyTemplate.vstemplate

Open MyTemplate.vstemplate. Near the end of the file, you should see the following <ProjectItem> element:

    <ProjectItem SubType="Code" TargetFileName="$fileinputname$.cs" ReplaceParameters="true">ChainsawUnitTests.cs</ProjectItem>

In VS2005, when you choose to create a new item for a project, it asks for a filename. This filename, minus the extension, is placed in $fileinputname$. For this template, however, I want to type in the name of the class to test and have VS generate a filename from that class name followed by "UnitTests.cs". So let's change the line to:

    <ProjectItem SubType="Code" TargetFileName="$fileinputname$UnitTests.cs" ReplaceParameters="true">ChainsawUnitTests.cs</ProjectItem>
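For context, here is a rough sketch of what the whole MyTemplate.vstemplate might look like after that edit. The element values are assumptions for illustration (the description text, the sort order, and the icon name as listed in the .zip above); the file the export wizard generates for you may differ, so only change the <ProjectItem> line in your own copy.

```xml
<VSTemplate Version="2.0.0" Type="Item"
            xmlns="http://schemas.microsoft.com/developer/vstemplate/2005">
  <TemplateData>
    <!-- Default text shown in the Name box of the Add New Item dialog -->
    <DefaultName>MyUnitTests.cs</DefaultName>
    <Name>MyUnitTests</Name>
    <!-- Hypothetical description; yours is whatever you typed in the wizard -->
    <Description>NUnit test fixture for a class and its adapter</Description>
    <ProjectType>CSharp</ProjectType>
    <SortOrder>10</SortOrder>
    <!-- Use the icon file name that actually appears in your .zip -->
    <Icon>_TemplateIcon.ico</Icon>
  </TemplateData>
  <TemplateContent>
    <References>
      <!-- Added because nunit.framework was checked under Select Item References -->
      <Reference>
        <Assembly>nunit.framework</Assembly>
      </Reference>
    </References>
    <!-- The edited line: the typed name becomes <name>UnitTests.cs -->
    <ProjectItem SubType="Code" TargetFileName="$fileinputname$UnitTests.cs" ReplaceParameters="true">ChainsawUnitTests.cs</ProjectItem>
  </TemplateContent>
</VSTemplate>
```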

Save the file, and let's open ChainsawUnitTests.cs. It looks nearly identical to the original:

    namespace $rootnamespace$
    {
        [TestFixture]
        public class $safeitemname$
        {
            ChainsawAdapter myAdapter;

            [TestFixtureSetUp]
            public void FixtureSetUp()
            {
                myAdapter = new ChainsawAdapter();
            }
        }
    }

In fact, the only changes made were to the namespace and classname. Earlier, I mentioned wanting to type in the class to be tested, as opposed to the unit test class, when adding an item through the wizard. This is because I want to replace "Chainsaw" in each instance of "ChainsawAdapter" with the class I'm testing. As you may have already guessed, this comes from $fileinputname$. Two replacements and we have the following:

    using System;
    using System.Collections.Generic;
    using System.Text;
    using NUnit.Framework;
    using Pedro.PowerTools;

    namespace $rootnamespace$
    {
        [TestFixture]
        public class $safeitemname$
        {
            $fileinputname$Adapter myAdapter;

            [TestFixtureSetUp]
            public void FixtureSetUp()
            {
                myAdapter = new $fileinputname$Adapter();
            }
        }
    }
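To make the substitution concrete, here is a small stand-alone sketch that mimics what VS does with these parameters: it swaps each $name$ token for a value. This is not VS's actual implementation. The class name TemplateParameterDemo and the Expand method are made up for illustration, and the parameter values are filled in by hand to match the DrillPress example used later in this post.

```csharp
using System;
using System.Collections.Generic;

public static class TemplateParameterDemo
{
    // Replaces every $key$ token in the template with the matching value.
    // Tokens without an entry in the dictionary are left untouched.
    public static string Expand(string template, IDictionary<string, string> parameters)
    {
        string result = template;
        foreach (KeyValuePair<string, string> p in parameters)
        {
            result = result.Replace("$" + p.Key + "$", p.Value);
        }
        return result;
    }

    public static void Main()
    {
        // Assumed values: what the parameters would hold if "DrillPress.cs"
        // were typed into the Name box of a project whose namespace is test1.
        Dictionary<string, string> parameters = new Dictionary<string, string>();
        parameters["fileinputname"] = "DrillPress";
        parameters["safeitemname"] = "DrillPressUnitTests";
        parameters["rootnamespace"] = "test1";

        string template =
            "namespace $rootnamespace$\n" +
            "{\n" +
            "    public class $safeitemname$\n" +
            "    {\n" +
            "        $fileinputname$Adapter myAdapter;\n" +
            "    }\n" +
            "}\n";

        Console.WriteLine(Expand(template, parameters));
    }
}
```

Running it prints the template with the namespace, class name, and adapter type filled in, which is a handy way to sanity-check a template's text before zipping it back up.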

Save the changes and re-zip the files. Drop the .zip in your custom ItemTemplates folder (the location can be found and/or modified in the VS Options dialog, under "Projects and Solutions" > "General"). Having done all that, go back to the test project in VS. Right-click on the project and choose Add > New Item. In the Templates dialog, you should see your new template near the bottom of the list. Enter "DrillPress.cs" into the Name textbox and click Add.
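As an aside, if you find yourself tweaking the template often, the re-zip-and-drop step can be scripted. The sketch below is one way to do it, assuming a newer .NET than VS2005 shipped with (System.IO.Compression.ZipFile arrived in .NET 4.5). TemplatePackager and its Package method are hypothetical names, and both folder paths are passed in rather than guessed.

```csharp
using System;
using System.IO;
using System.IO.Compression;

public static class TemplatePackager
{
    // Zips the files inside templateDir (not the folder itself) and writes
    // <templateName>.zip into itemTemplatesDir, replacing any older copy.
    public static string Package(string templateDir, string itemTemplatesDir, string templateName)
    {
        string zipPath = Path.Combine(itemTemplatesDir, templateName + ".zip");
        if (File.Exists(zipPath))
        {
            // CreateFromDirectory throws if the destination already exists.
            File.Delete(zipPath);
        }
        ZipFile.CreateFromDirectory(templateDir, zipPath);
        return zipPath;
    }

    public static void Main(string[] args)
    {
        if (args.Length != 2)
        {
            Console.Error.WriteLine("usage: TemplatePackager <templateDir> <itemTemplatesDir>");
            return;
        }
        // "MyUnitTests" is the template name used in this post.
        string zip = Package(args[0], args[1], "MyUnitTests");
        Console.WriteLine("Wrote " + zip);
    }
}
```

The point of zipping the folder's contents rather than the folder is that the .vstemplate ends up at the root of the archive, which, as far as I can tell, is where VS expects to find it.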

Assuming all went well, VS should generate DrillPressUnitTests.cs with the following content:

    using System;
    using System.Collections.Generic;
    using System.Text;
    using NUnit.Framework;
    using Pedro.PowerTools;

    namespace test1
    {
        [TestFixture]
        public class DrillPressUnitTests
        {
            DrillPressAdapter myAdapter;

            [TestFixtureSetUp]
            public void FixtureSetUp()
            {
                myAdapter = new DrillPressAdapter();
            }
        }
    }